DeepMind, the AI startup Google acquired in 2014, is probably best known for creating the first AI to beat a world champion at Go. So what do you do after mastering one of the world's most challenging board games? You tackle a complex video game. Specifically, DeepMind decided to write an AI to play the real-time strategy game StarCraft II.

StarCraft requires players to gather resources, build dozens of military units, and use them to try to destroy their opponents. StarCraft is particularly challenging for an AI because players must carry out long-term plans over several minutes of gameplay, tweaking them on the fly in the face of enemy counterattacks. DeepMind says that prior to its own effort, no one had come close to designing a StarCraft AI as good as the best human players.

Last Thursday, DeepMind announced a significant breakthrough. The company pitted its AI, dubbed AlphaStar, against two top StarCraft players—Dario "TLO" Wünsch and Grzegorz "MaNa" Komincz. AlphaStar won a five-game series against Wünsch 5-0, then beat Komincz 5-0, too.

AlphaStar may be the strongest StarCraft AI ever created. But it wasn't quite as big an accomplishment as it might appear at first glance, because it wasn't an entirely fair fight.

AlphaStar was trained using "up to 200 years" of virtual gameplay

DeepMind writes that "AlphaStar’s behavior is generated by a deep neural network that receives input data from the raw game interface (a list of units and their properties) and outputs a sequence of instructions that constitute an action within the game. More specifically, the neural network architecture applies a transformer torso to the units, combined with a deep LSTM core, an auto-regressive policy head with a pointer network, and a centralized value baseline."
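One piece of that description is easier to picture than the rest. Because the game hands AlphaStar a variable-length list of units, a "pointer network" lets the policy pick a unit by scoring every entry in that list and pointing at one, rather than choosing from a fixed menu of outputs. The toy sketch below illustrates only that selection idea under my own assumptions (hypothetical two-number unit "embeddings" and plain dot-product scoring); it is not AlphaStar's code, and real pointer networks compute their scores with learned weights.

```python
import math
from typing import List, Sequence, Tuple


def softmax(scores: List[float]) -> List[float]:
    # Subtract the max score for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def pointer_select(
    query: Sequence[float], unit_embeddings: List[Sequence[float]]
) -> Tuple[int, List[float]]:
    """Toy pointer head: score each unit in a variable-length list and point at one.

    Assumption: plain dot-product scoring. Real pointer networks compute these
    scores with learned weights; this only shows the selection mechanism.
    """
    scores = [sum(q * u for q, u in zip(query, emb)) for emb in unit_embeddings]
    probs = softmax(scores)
    # Greedy choice for simplicity; an agent could instead sample from probs.
    choice = max(range(len(probs)), key=lambda i: probs[i])
    return choice, probs


# Hypothetical 2-number "embeddings" for three units on the battlefield.
units = [[0.2, 0.9], [0.9, 0.1], [0.1, 0.1]]
choice, probs = pointer_select([1.0, 0.0], units)
print(choice)  # 1: the second unit scores highest against this query
```

The same function works whether the list holds three units or three hundred, which is the whole point of pointing into the input instead of using a fixed-size output layer.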

I'll cop to not fully understanding what all of that means. DeepMind declined to talk to me for this story, and its forthcoming peer-reviewed paper explaining exactly how AlphaStar works has not yet been released. But DeepMind does explain in some detail how it trained its virtual StarCraft players to get better over time.

The process started by using supervised learning to help agents learn to mimic the strategies of human players. This supervised learning stage alone was sufficient to build a competent StarCraft II bot. DeepMind says that this initial agent "defeated the built-in Elite level AI—around gold level for a human player—in 95% of games."
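Here is the simplest possible form of that imitation idea, as a hypothetical sketch rather than DeepMind's method. AlphaStar trained a deep neural network on recorded human games; in this toy version each made-up game "state" is just a label, and the "model" is a lookup table holding the action human players chose most often in that state. What it shares with the real thing is that the training labels are simply what humans did: there is no reward for winning or losing.

```python
from collections import Counter, defaultdict
from typing import Dict, Hashable, List, Tuple


def fit_imitation_policy(demos: List[Tuple[Hashable, str]]) -> Dict[Hashable, str]:
    """Tabular behavior cloning: map each observed state to the action humans chose most often.

    This is supervised learning: the 'labels' are the human players' actions,
    and no reward signal from winning or losing is involved.
    """
    counts: Dict[Hashable, Counter] = defaultdict(Counter)
    for state, action in demos:
        counts[state][action] += 1
    return {state: c.most_common(1)[0][0] for state, c in counts.items()}


# Hypothetical (state, human action) pairs, as if extracted from recorded games.
demos = [
    ("enemy_rush", "build_defense"),
    ("enemy_rush", "build_defense"),
    ("enemy_rush", "expand"),
    ("quiet_map", "expand"),
    ("quiet_map", "expand"),
]
policy = fit_imitation_policy(demos)
print(policy)  # {'enemy_rush': 'build_defense', 'quiet_map': 'expand'}
```

A bot built this way can only be as good as the humans it copies, which is why the next stage switches to agents playing against each other.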

DeepMind then branched this initial AI into multiple variants, each with a slightly different playing style. All of these agents were thrown into a virtual StarCraft league, with each agent playing others around the clock, learning from their mistakes, and evolving their strategies over time.

"To encourage diversity in the league, each agent has its own learning objective: for example, which competitors should this agent aim to beat, and any additional internal motivations that bias how the agent plays," DeepMind writes. "One agent may have an objective to beat one specific competitor, while another agent may have to beat a whole distribution of competitors, but do so by building more of a particular game unit."
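A toy way to see how per-agent objectives produce different playing styles is a tiny "league" of rock-paper-scissors players. This is my own illustration, not DeepMind's training algorithm: each hypothetical agent nudges its mixed strategy toward the best response against either one named rival or the average of the rest of the league, and an optional bonus for a "favorite" move stands in for an internal motivation such as building more of a particular unit.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

MOVES = ["rock", "paper", "scissors"]
BEATS = {"rock": "scissors", "paper": "rock", "scissors": "paper"}

Strategy = Dict[str, float]  # probability of playing each move


def expected_payoff(me: Strategy, opp: Strategy) -> float:
    """+1 per expected win, -1 per expected loss, 0 for ties."""
    total = 0.0
    for a, pa in me.items():
        for b, pb in opp.items():
            if BEATS[a] == b:
                total += pa * pb
            elif BEATS[b] == a:
                total -= pa * pb
    return total


def average(strategies: List[Strategy]) -> Strategy:
    return {m: sum(s[m] for s in strategies) / len(strategies) for m in MOVES}


@dataclass
class Agent:
    name: str
    strategy: Strategy
    target: Optional[str] = None    # None means "beat the whole league"
    favorite: Optional[str] = None  # hypothetical internal motivation
    bonus: float = 0.0              # extra payoff for playing the favorite move


def league_step(agents: List[Agent], lr: float = 0.1) -> None:
    """Move every agent a step toward a best response to its own objective."""
    updates: Dict[str, Strategy] = {}
    for agent in agents:
        if agent.target is None:
            opp = average([a.strategy for a in agents if a is not agent])
        else:
            opp = next(a.strategy for a in agents if a.name == agent.target)

        def score(move: str) -> float:
            extra = agent.bonus if move == agent.favorite else 0.0
            return expected_payoff({move: 1.0}, opp) + extra

        best = max(MOVES, key=score)
        updates[agent.name] = {
            m: (1 - lr) * p + lr * (1.0 if m == best else 0.0)
            for m, p in agent.strategy.items()
        }
    # Apply all updates at once so every agent reacted to the same league snapshot.
    for agent in agents:
        agent.strategy = updates[agent.name]


uniform = {m: 1 / 3 for m in MOVES}
league = [
    Agent("main", {"rock": 0.8, "paper": 0.1, "scissors": 0.1}),
    Agent("exploiter", dict(uniform), target="main"),
    Agent("scissors_fan", dict(uniform), favorite="scissors", bonus=1.0),
]
league_step(league)
print({a.name: round(max(a.strategy.values()), 2) for a in league})
```

After one step the exploiter leans toward paper to counter the rock-heavy main agent, while the scissors fan leans toward scissors even though paper would score better against the league without its bonus: different objectives, different styles.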

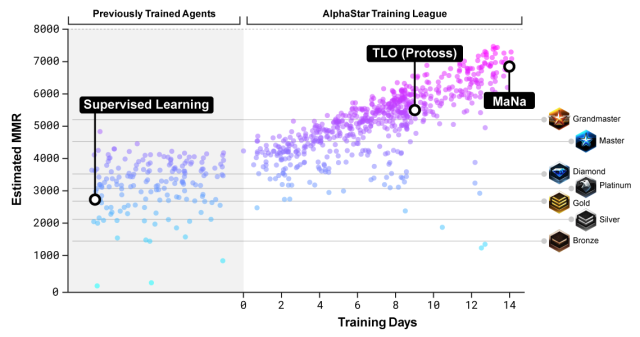

According to DeepMind, some agents gained the equivalent of 200 years of practice playing StarCraft against other agents. Over a two-week period, this Darwinian process improved the average skill of the agents dramatically:

At the end of this process, DeepMind selected five of the strongest agents from its virtual menagerie to face off against AlphaStar's human challengers. One consequence of this approach was that the human players faced a different opposing strategy in each game they played against AlphaStar.

AlphaStar had an unfair advantage in its initial games

Last week DeepMind invited two professional StarCraft players and announcers to provide commentary as they replayed some of AlphaStar's 10 games against Wünsch and Komincz. The commentators were blown away by AlphaStar's "micro" capabilities—the ability to make quick tactical decisions in the heat of battle.

This ability was most obvious in Game 4 of AlphaStar's series against Komincz. Komincz was the stronger of the two human players AlphaStar faced, and Game 4 was the closest Komincz came to winning during the five-game series. The climactic battle of the game pitted a Komincz army composed of several different unit types (mostly Immortals, Archons, and Zealots) against an AlphaStar army composed entirely of Stalkers.

Stalkers don't have particularly strong weapons and armor, so they'll generally lose against Immortals and Archons in a head-to-head fight. But Stalkers move fast, and they have a capability called "blink" that allows them to teleport a short distance.

That created an opportunity for AlphaStar: it could attack with a big group of Stalkers, have the front row of Stalkers take some damage, and then blink them to the rear of the army before they got killed. Stalker shields gradually recharge, so by continuously rotating its troops, AlphaStar was able to do a lot of damage to the enemy while losing very few of its own units.
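The logic of the rotation trick shows up even in a deliberately crude simulation. Every number and rule here is a made-up assumption (only the front Stalker takes fire, damage is constant, and shields recharge at a fixed rate for units out of the line of fire), so this sketches why the tactic works, not how StarCraft II's actual combat mechanics behave.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Stalker:
    shields: float
    hp: float

    def take_damage(self, dmg: float) -> None:
        # Shields absorb damage first; anything left over hits hit points.
        absorbed = min(self.shields, dmg)
        self.shields -= absorbed
        self.hp -= dmg - absorbed


def stalkers_lost(
    n_units: int,
    ticks: int,
    incoming_dmg: float,
    regen: float,
    max_shields: float,
    max_hp: float,
    blink_threshold: Optional[float] = None,
) -> int:
    """Count Stalkers lost when only the front unit (army[0]) takes enemy fire.

    If blink_threshold is set, a front unit whose shields fall to that level
    blinks to the back of the line instead of staying in the fight.
    """
    army: List[Stalker] = [Stalker(max_shields, max_hp) for _ in range(n_units)]
    lost = 0
    for _ in range(ticks):
        if not army:
            break
        front = army[0]
        front.take_damage(incoming_dmg)
        if front.hp <= 0:
            army.pop(0)
            lost += 1
        elif blink_threshold is not None and front.shields <= blink_threshold:
            army.append(army.pop(0))  # "blink" the damaged unit to the rear
        # Every unit behind the front line recharges shields (assumed constant rate).
        for s in army[1:]:
            s.shields = min(max_shields, s.shields + regen)
    return lost


params = dict(n_units=4, ticks=40, incoming_dmg=20, regen=10, max_shields=80, max_hp=80)
print(stalkers_lost(**params))                      # 4: the line is ground down one by one
print(stalkers_lost(**params, blink_threshold=20))  # 0: rotation keeps every unit alive
```

The catch is the `blink_threshold` check: it has to run for every Stalker on every tick, which is exactly the constant attention a human player struggles to supply.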

The downside of this approach is that it demands constant player attention. The player needs to monitor the health of Stalkers to figure out which ones need to blink away. And that can get tricky, because a StarCraft player often has a lot of other stuff on his plate—he needs to worry about building new units in his base, scouting for enemy bases, watching for enemy attacks, and so forth.

Commentators watching the climactic Game 4 battle between AlphaStar and Komincz marveled at AlphaStar's micro abilities.

"We keep seeing AlphaStar do that trick that you're talking about," said commentator Dan Stemkoski. AlphaStar would attack Komincz's units and "then blink away" before taking significant damage. "I feel like most pros would have lost all these stalkers by now," he added.

AlphaStar's performance was particularly impressive because at some points it was using this tactic with multiple groups of Stalkers in different locations.

"It's incredibly difficult to do this in a game of StarCraft II, where you micro units on the south side of your screen, but at the same time you also have to do it on the north side," said commentator Kevin "RotterdaM" van der Kooi. "This is phenomenally good control."

"What's really kind of shocking about this is we went over the actions per minute, and it's not really that high," added Stemkoski. "It's an acceptable pro level of speed coming out of AlphaStar."

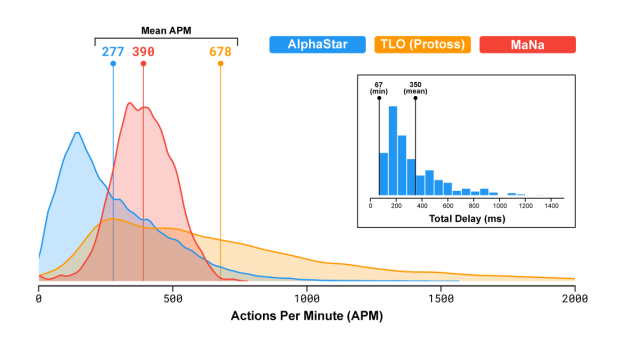

DeepMind produced a graphic that illustrates the point:

As this chart demonstrates, top StarCraft players can issue instructions to their units very quickly. Grzegorz "MaNa" Komincz averaged 390 actions per minute (more than six actions per second!) over the course of his games against AlphaStar. But of course, a computer program can easily issue thousands of actions per minute, allowing it to exert a level of control over its units that no human player could match.
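The arithmetic behind actions per minute is simple, but an average over a whole game can hide how fast a player acts during the busiest moments. The sketch below converts APM to actions per second and compares a game-long average with the busiest few seconds of play. The function names, the hypothetical action log, and the five-second window are my own assumptions for illustration, not how DeepMind's chart was computed.

```python
from typing import List


def actions_per_second(apm: float) -> float:
    return apm / 60.0


def average_apm(action_times_s: List[float], game_length_s: float) -> float:
    """Total actions divided by game length in minutes."""
    return len(action_times_s) / (game_length_s / 60.0)


def peak_apm(action_times_s: List[float], window_s: float = 5.0) -> float:
    """Most actions inside any window_s-second span, scaled to a per-minute rate."""
    ts = sorted(action_times_s)
    best = 0
    left = 0
    for right in range(len(ts)):
        # Shrink the window from the left until it spans less than window_s seconds.
        while ts[right] - ts[left] >= window_s:
            left += 1
        best = max(best, right - left + 1)
    return best * 60.0 / window_s


print(actions_per_second(390))  # 6.5, i.e. "more than six actions per second"

# Hypothetical one-minute log: 20 rapid actions in the first two seconds, then nothing.
burst = [0.1 * i for i in range(20)]
print(average_apm(burst, game_length_s=60.0))  # 20.0
print(peak_apm(burst))                          # 240.0
```

The same log yields an unremarkable 20 APM on average but a 240 APM burst, which is why a single average figure says little about the peak control a player, or a program, can exert in a fight.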
