ORIGINALLY POSTED 18th September 2009

29,670 views on developerworks TOP 10 POST

I find I start so many posts these days describing how busy I’ve been as an excuse for why my post frequency has been lower than normal. This has to be a new record, as I spotted my last post was over a month ago. This time, however, I do have a much better excuse: two weeks off, away from everything to do with work, finding gold (you fool) within quartz rocks on the old tin mining coastline of North Cornwall and generally messing about with the kids in rock pools and the like – much more fun than analysing performance numbers, generating marketing information and briefing customers. No offense to anyone intended, but it’s good to switch off and spend the time with the family. I’m not even going to honour Hu with a link to his latest scaremongering post about SVC, but Martin deserves a link to his aptly titled “I spy FUD”. Oh, and thanks to Chris Evans for his coverage of his visit to Hursley over on The Storage Architect – despite the dodgy photo of me. If I’d known, I’d have dressed up a bit – hehe!

Since returning, and wishing I could just hit delete on the mountain of email that was waiting for me, I’ve spent more than my normal day or two with the suit on, explaining to interested customers what SVC is, how it can help solve business issues and where the roadmap is leading. There is definitely more and more demand for storage virtualization, and with SVC being the market leader, that means more interesting business problems to solve and clients to talk to.

After explaining how SVC is deployed and how it can help to increase utilisation across heterogeneous SAN environments, improve performance and simplify day to day volume management, I like to take a step back and look at where we are in terms of traditional disk access density.

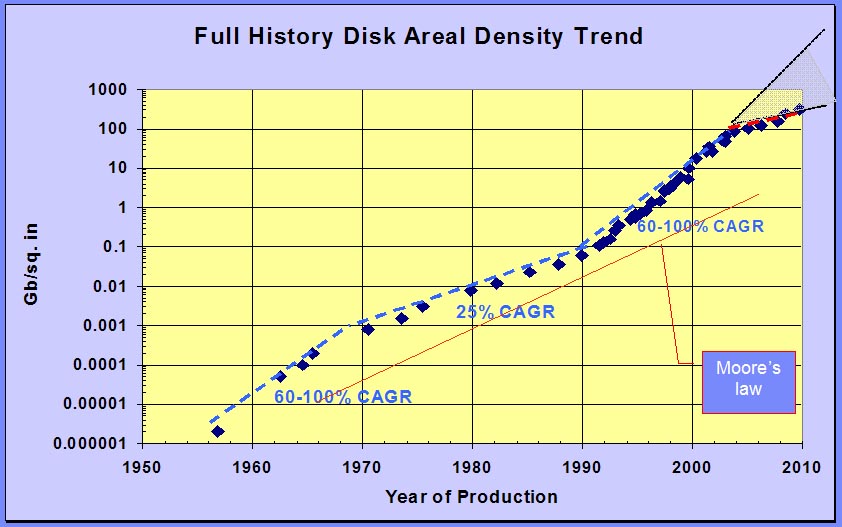

I covered this a year or two back, but it’s worth thinking about again. The graph shows areal density plotted on a log scale against time.

Source credits:

Disk drive evolution – “Technological impact of magnetic hard disk drives on storage systems”, Grochowski & Halem, IBM Systems Journal, Vol 42, Number 2, 2003

Moore’s ‘law’ – “Cramming more components onto integrated circuits”, Gordon Moore, Electronics, Vol 38, Number 8, April 19th 1965 [et seq.]

On the face of it, there look to have been four distinct phases. Starting back in 1956 with IBM’s invention of the hard disk, increases in areal density were fairly easy to come by. You could almost see the tracks on the platter! Since users up till then had been used to tape and punched tape or cards, a truly random access device with a seemingly huge amount of capacity (5MB) was amazing. People were quickly hooked and the hard disk ‘drug’ sales continued unabated. Twice as much next year for half the cost? We’ll have some of that, please.

Until around 1970, that is. Suddenly it wasn’t half the price next year, nor was it twice as much. Compound growth rates dropped to around 25% per annum. Something had to be done, as demand was still growing and people had got used to simply ‘using’ the storage. The idea of managing it and optimising utilisation wasn’t even considered. In the early 1970s IBM introduced a lot of what System z users today take for granted. In fact, if it weren’t for these developments we wouldn’t have things like VMware today. Yes, virtualization was introduced, and the disciplines this brought mean that today we enjoy more than 90% storage utilisation in most mainframe environments.

1990 saw the mainstream introduction of the GMR head. Now we were back on track, with 60-100% compound annual growth. During the same decade we saw a huge boom in the open systems market, as Unix, Windows and latterly Linux drove huge demand for client-server solutions and SANs. Of course, none of these systems had or have the benefit of the disciplines of mainframe storage management, and so as demand increased, areal density continued to drive the costs down with capacity doubling. We (vendors) got these new users ‘hooked’ on the disk ‘drug’ too.
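To put those growth rates in perspective, here is a rough back-of-envelope sketch (not taken from the graph's underlying data) that turns a compound annual growth rate into the time it takes areal density to double. The function name `doubling_time_years` is purely illustrative, and the only inputs are the 25%, 60% and 100% figures quoted above.

```python
import math


def doubling_time_years(cagr: float) -> float:
    """Years needed to double at a given compound annual growth rate.

    cagr is a fraction, e.g. 0.25 for 25% per annum.
    Solves (1 + cagr) ** t == 2 for t.
    """
    if cagr <= 0:
        raise ValueError("growth rate must be positive")
    return math.log(2) / math.log(1 + cagr)


if __name__ == "__main__":
    # Growth rates quoted in the post: the slow phases (25%)
    # and the two ends of the fast phase (60% and 100%).
    for rate in (0.25, 0.60, 1.00):
        years = doubling_time_years(rate)
        print(f"{rate:.0%} CAGR -> doubles in about {years:.1f} years")
```

At 100% growth, capacity per square inch doubles every year; at 25% it takes a little over three years, which is why the slow phases hurt so much when demand keeps growing at the old pace.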

Around 1995 the folks in IBM Research hadn’t found the ‘next big thing’ for disks. (Despite selling off the commodity drive manufacturing business, IBM still actively researches the area.) The kink in the curve was again looming. History always repeats, and so, seeing this slowdown coming, we looked back to see what we did last time. Yup, storage virtualization. [Aside] At this point in the briefing, having already explained to our clients how SVC can help to essentially drive up utilisation, add discipline back to storage and all the other good things – the penny usually drops.

Around 1999 a project under the name of COMPASS (Commodity Parts Storage Subsystem) was also kicking around the ivory towers of Almaden Research, where a couple of members of the Hursley storage team were on assignment. When they came back, they brought the foundation architecture that would become SVC. As you can see, in 2003 compound growth once again slowed to around 25%.

Another interesting side note can be seen when you add the areal density of silicon, which to this day has tracked Moore’s Law almost scarily closely. If, for example, the GMR head had not been invented by Stuart Parkin, then we’d probably have had mainstream solid state drives in the mid-90s. If nothing else comes along to push spinning rust back to the heady days of 65-100% CAGR, then by 2015 solid state density will overtake magnetic density, in bits-per-square-inch terms.

My thoughts are that flash is not a long-term replacement for spinning disks. It’s a start, but I doubt we will be sitting here in 50 years plotting the same picture for NAND-based flash drives. I suspect something better will come along before long, and it will be the next replacement, and the next, and the next… Solid state as we know it today has, if you like, opened the floodgates, and I look forward to experimenting with whatever comes next.
